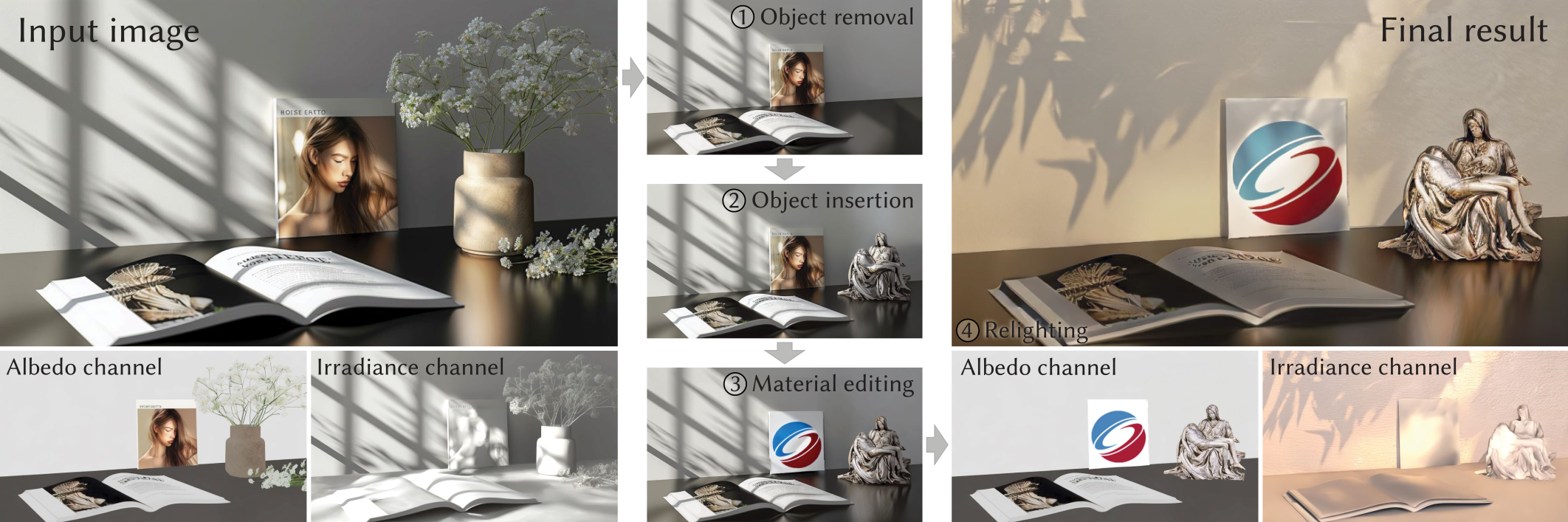

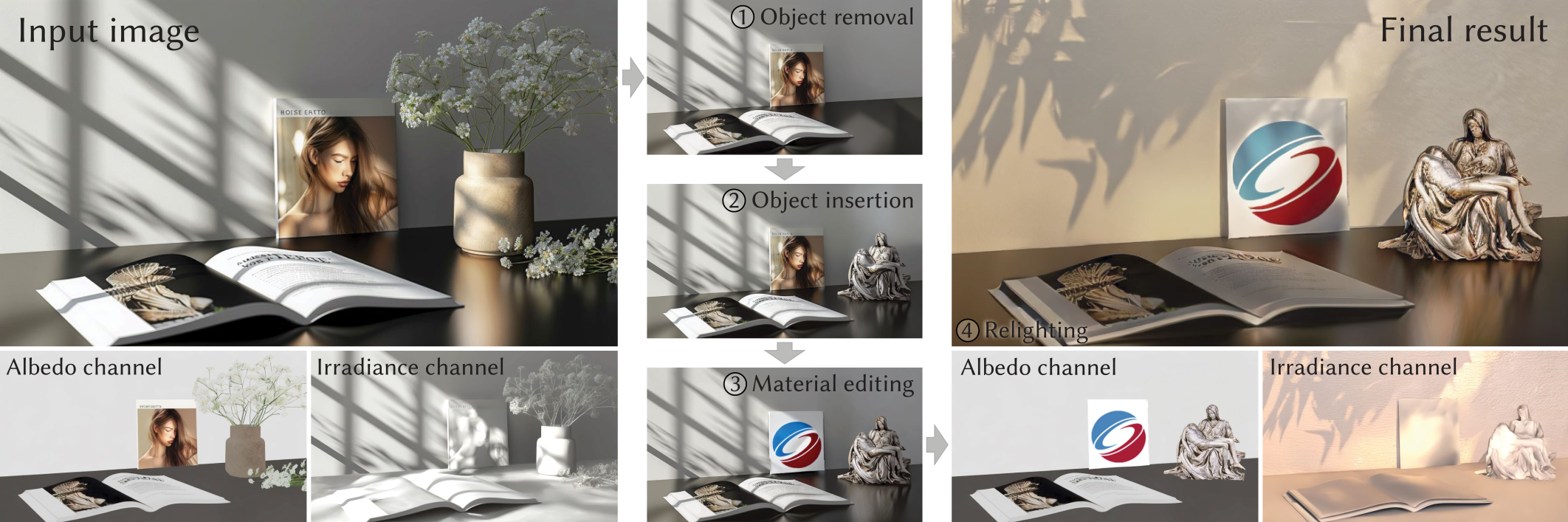

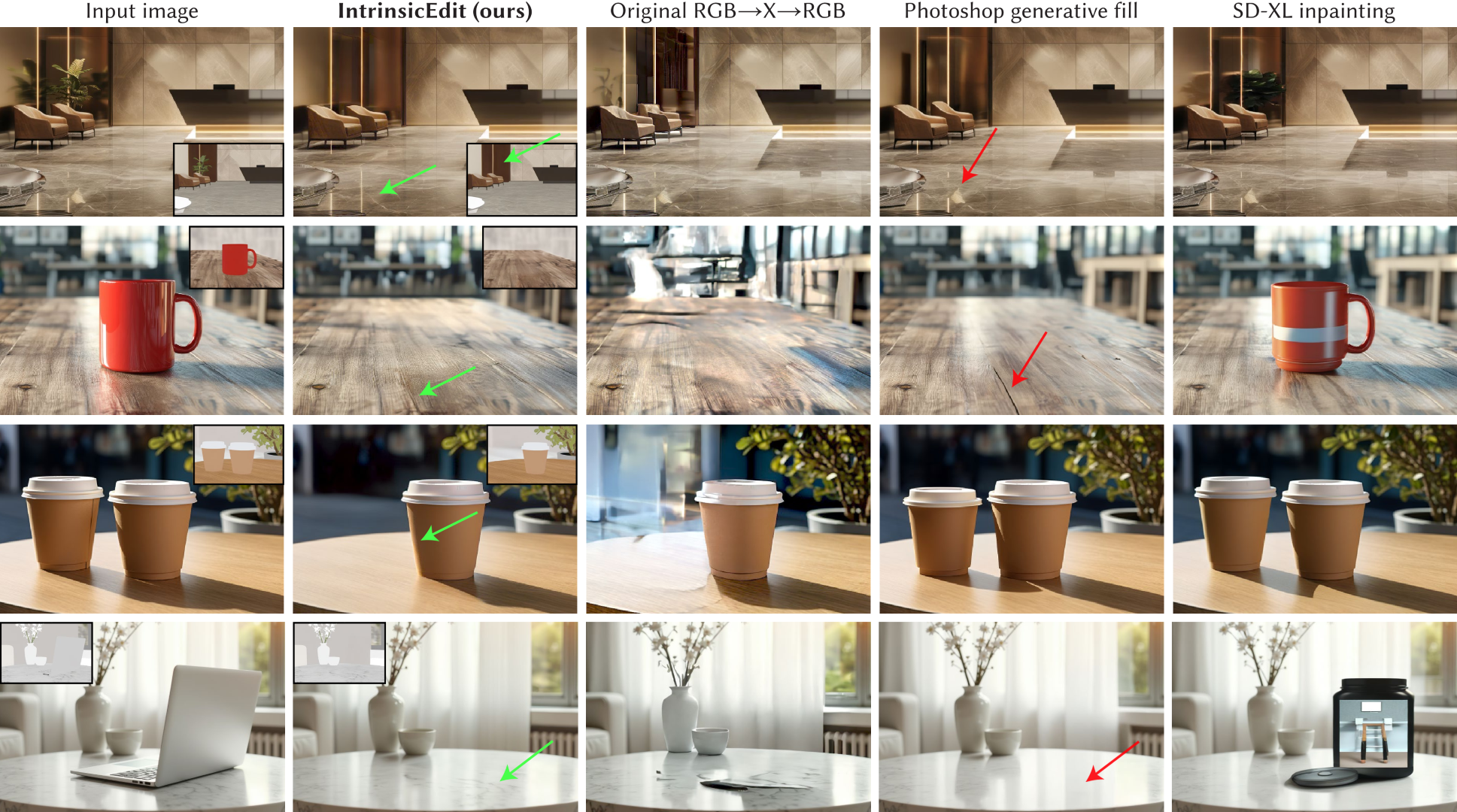

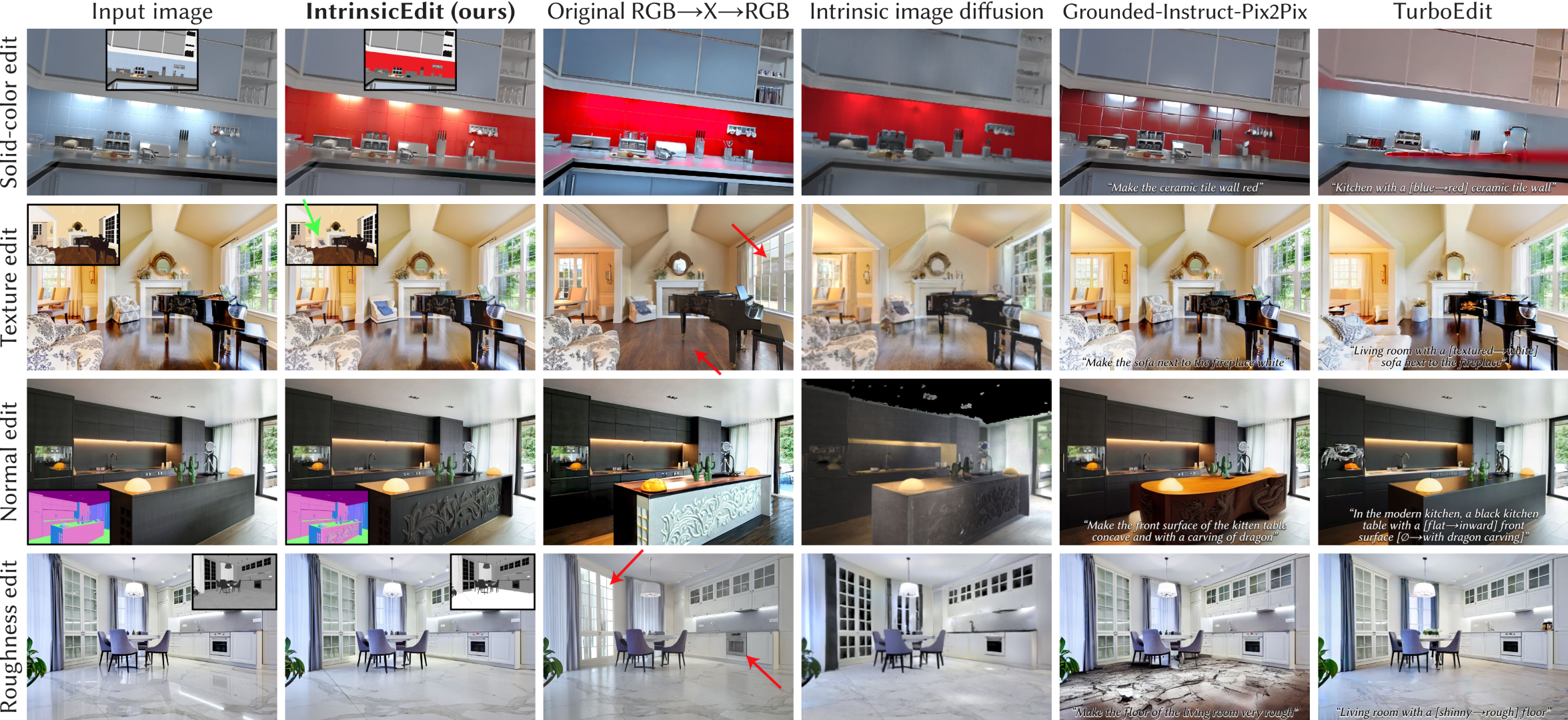

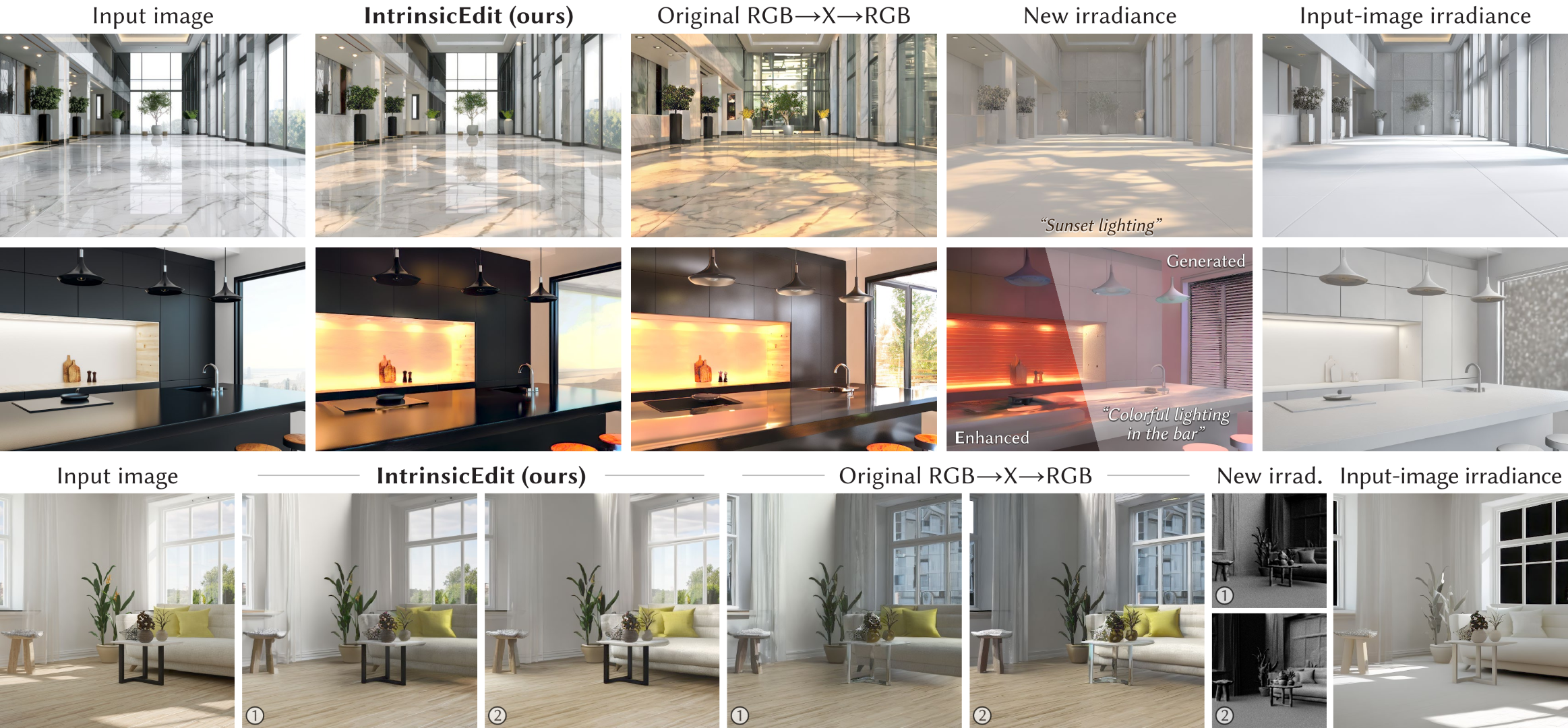

Generative diffusion models have advanced image editing with high-quality results and intuitive interfaces such as prompts and semantic drawing. However, these interfaces lack precise control, and the associated methods typically specialize in a single editing task. We introduce a versatile, generative workflow that operates in an intrinsic-image latent space, enabling semantic, local manipulation with pixel precision for a range of editing operations. Building atop the RGB-X diffusion framework, we address the key challenges of identity preservation and intrinsic-channel entanglement. By incorporating exact diffusion inversion and disentangled channel manipulation, we enable precise, efficient editing with automatic resolution of global illumination effects, all without additional data collection or model fine-tuning. We demonstrate state-of-the-art performance across a variety of tasks on complex images, including color and texture adjustments, object insertion and removal, global relighting, and their combinations.

@article{lyu2025intrinsic,
  title={IntrinsicEdit: Precise generative image manipulation in intrinsic space},
  author={Lyu, Linjie and Deschaintre, Valentin and Hold-Geoffroy, Yannick and Ha\v{s}an, Milo\v{s} and Yoon, Jae Shin and Leimk{\"u}hler, Thomas and Theobalt, Christian and Georgiev, Iliyan},
  journal={ACM Transactions on Graphics},
  volume={44},
  number={4},
  year={2025}
}